Research Area

5.4 Information presentation technology

To convey information in an easy-to-understand manner to all viewers, including those with vision or hearing impairments, we made progress in our research on a technology to generate sign language computer graphics (CG) from sports information and a technology to convey the status of sports events and motion information through tactile sensation. We also began research on information presentation using the sense of smell.

■Sign language CG for presenting sports information

To enrich broadcasting services for viewers who mainly use sign language, we are researching a technology for automatically generating sign language animations using CG for information about sports events.

We developed a system that automatically generates sign language CG and Japanese closed captions by combining competition data delivered during a game with templates prepared in advance, and displays them in a web browser in synchronization with the game status. We conducted experiments on real-time automatic generation and presentation for ice hockey and curling matches and exhibited the results at the NHK STRL Open House 2018. Evaluation experiments using the generated content yielded guidelines on the information that should be presented and on the screen layout for an actual service (Figure 5-3)(1).
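The combination of competition data with prepared templates can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the event fields, the template wording and the sign word labels are hypothetical placeholders, not the data format or the templates of the actual system, and the sign word sequence does not claim to be correct Japanese Sign Language.

```python
# Hypothetical templates, one per event type. Each holds a caption pattern and
# a sequence of sign word labels; the slots in braces are filled from the data.
TEMPLATES = {
    "goal": {
        "caption": "{team} scores! {home} {home_score} - {away_score} {away}",
        "signs": ["{team}", "GOAL", "SCORE", "{home_score}", "VERSUS", "{away_score}"],
    },
    "period_end": {
        "caption": "End of period {period}",
        "signs": ["PERIOD", "{period}", "END"],
    },
}


def render(event):
    """Fill the template chosen by event["type"] with the event's values.

    Returns (caption, signs): the caption text and the list of sign word
    labels that a CG player would animate in order.
    """
    template = TEMPLATES[event["type"]]
    caption = template["caption"].format(**event)
    signs = [slot.format(**event) for slot in template["signs"]]
    return caption, signs


# Example event in an assumed format (not the real competition data feed).
goal = {"type": "goal", "team": "Japan", "home": "Japan", "away": "Sweden",
        "home_score": 2, "away_score": 1}
caption, signs = render(goal)
print(caption)
print(signs)
```

Because both outputs are produced from the same event, the caption and the sign word sequence stay consistent with each other and with the game status.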

In our research on machine translation from Japanese sentences to sign language CG for sports news, we prototyped an automatic translation system that supports sentences with complicated syntactic structures. It uses a syntax transfer method, which changes the order of words by converting Japanese syntactic structures to sign language ones. The conversion of syntactic structures uses data obtained by applying machine learning to the results of Japanese syntax analysis and the results of the sign language syntax analysis that we developed in FY 2017. We also developed a sign language CG production assistance system that allows the user to correct translation errors manually by changing the order of sign language words or replacing an incorrect word with a correct one. Evaluation experiments using sign language CG animations generated by this system demonstrated that efficient correction requires a function to appropriately present the words or phrases to be replaced(2). We also began developing a function to add appropriate facial expressions in accordance with the impression of the context and words, and began planning evaluation experiments on the production assistance system that assume actual operation.
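The idea of syntax transfer, and of the two manual corrections described above, can be sketched in Python as follows. This is a toy under stated assumptions: the tree representation, the node labels and the single reordering rule are hypothetical, the rule is written by hand rather than learned, English words stand in for sign language words, and nothing here reflects actual Japanese Sign Language grammar or the prototype's implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    label: str                                     # syntactic category
    children: list = field(default_factory=list)   # empty for word nodes
    word: str = ""                                 # set only on word nodes


# Hypothetical transfer rules: (parent label, child labels in source order)
# maps to the order in which the children appear on the target side.
RULES = {
    ("S", ("ADV", "NP", "VP")): (1, 0, 2),
}


def transfer(node):
    """Return a new tree whose children are reordered wherever a rule matches."""
    if not node.children:
        return node
    kids = [transfer(child) for child in node.children]
    key = (node.label, tuple(kid.label for kid in kids))
    order = RULES.get(key, tuple(range(len(kids))))  # no rule: keep the order
    return Node(node.label, [kids[i] for i in order])


def words(node):
    """Read the words of a tree from left to right."""
    if not node.children:
        return [node.word]
    return [w for child in node.children for w in words(child)]


def move_word(sequence, src, dst):
    """Manual correction: move the word at position src to position dst."""
    out = list(sequence)
    out.insert(dst, out.pop(src))
    return out


def replace_word(sequence, index, new_word):
    """Manual correction: replace an incorrect word with a correct one."""
    out = list(sequence)
    out[index] = new_word
    return out


tree = Node("S", [
    Node("ADV", word="yesterday"),
    Node("NP", word="team"),
    Node("VP", [Node("NP", word="match"), Node("V", word="win")]),
])
print(words(transfer(tree)))
```

Operating on the tree rather than on the flat word sequence lets a single rule move a whole phrase, which is why this kind of approach suits sentences with nested structure.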

With the aim of expanding the parallel corpus necessary for improving the accuracy of machine translation, we began R&D on a technology to convert sign language video into text using image recognition. We examined previous studies on sign language recognition using deep learning and conducted evaluation experiments using training data for Japanese Sign Language (JSL). The experiments confirmed the effectiveness of deep learning and gave us knowledge about the training data necessary for improving the recognition rate of JSL.

We have been gathering user feedback on the understandability of our weather report sign language CG for the Kanto Region via an evaluation website that we released on NHK Online in February 2017. To expand the coverage area of scheduled forecasts from the prefectural capitals of the seven prefectures in the Kanto Region to those of all 47 prefectures in Japan, we prototyped a weather report sign language CG generation system and verified its operation. Part of this study was conducted in cooperation with Kogakuin University.

Figure 5-3. Example of the screen layout of sports sign language CG service

■Haptic presentation technology for touchable TV

We are researching a technology for conveying information about movements in video through the skin. Our main purpose is to convey information that is difficult to convey with speech in fast-moving sports content, such as the moving directions of the ball and players and the timing at which the ball hits the floor, wall or racket. For the tactile presentation of motions and timings, we decided to use three types of stimuli: vibration, sliding and acceleration. In FY 2017, we identified fundamental conditions for the perception and discrimination of these stimuli and demonstrated the feasibility of conveying motions and timings. In FY 2018, we conducted experiments in which tactile stimuli were artificially added to the video and speech of virtual sports content to verify whether it is possible to understand the flow of the game and which team has scored a point. The experiments, in which visually impaired people participated, demonstrated that it is possible to understand the game status even without visual information. In another experiment, the back-and-forth motion of the ball in tennis was presented by sliding, that is, the linear movement of a pressure stimulus across the skin. This experiment demonstrated the feasibility of conveying the direction of ball motion, while identifying perception and recognition characteristics such as the difficulty of conveying changes in motion speed.

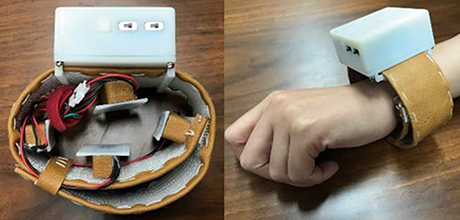

We developed a wristband haptic device that gives stimuli to the wrist using four vibrators (Figure 5-4)(3). It improves on the cube vibration device developed in FY 2017 in that it does not need to be held in the hand. Using this device, we expressed player actions in volleyball (serve, receive, set, attack) and whether the ball landed inside or outside the line by vibration. Evaluation experiments in which people with visual impairment and people with hearing impairment participated demonstrated that a certain level of information can be conveyed.
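One way to picture how game events could be expressed on four vibrators is a lookup from event type to a vibration pattern, expanded into a drive schedule. The Python sketch below is illustrative only: the assignment of vibrators, the durations and the gap between pulses are hypothetical values chosen for the example, not the patterns evaluated with the actual device.

```python
GAP_MS = 20  # assumed pause between consecutive pulses of one pattern

# Hypothetical patterns: each event maps to a list of pulses, and each pulse
# is (indices of the vibrators to drive, duration in milliseconds).
PATTERNS = {
    "serve":   [((0,), 100)],
    "receive": [((1,), 100)],
    "set":     [((2,), 100)],
    "attack":  [((0, 1, 2, 3), 200)],
    "in":      [((3,), 80), ((3,), 80)],
    "out":     [((0,), 80), ((1,), 80), ((2,), 80), ((3,), 80)],
}


def schedule(events):
    """Expand (time_ms, event_name) pairs into a vibration timeline.

    Returns a list of (start_ms, end_ms, vibrator_indices) entries that a
    device driver could play back in order.
    """
    timeline = []
    for time_ms, name in events:
        start = time_ms
        for vibrators, duration in PATTERNS[name]:
            timeline.append((start, start + duration, vibrators))
            start += duration + GAP_MS
    return timeline


rally = [(0, "serve"), (600, "receive"), (1200, "set"), (1700, "attack"), (2300, "in")]
for entry in schedule(rally):
    print(entry)
```

Keeping the patterns in a table separate from the scheduling logic makes it easy to try different patterns in evaluation experiments without changing the playback code.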

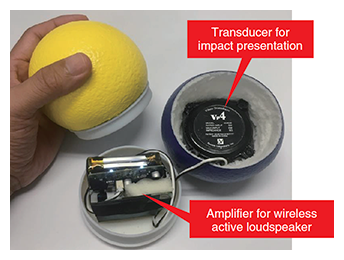

For an experiment on simulating and conveying impact by using vibration with large acceleration, we developed a ball-type haptic device that presents vibrations to the palm (Figure 5-5). By varying and combining parameters such as the vibration amplitude and the volume and type of sound information, we confirmed that the game status can be understood without visual information(4). We plan to continue our research to achieve more enriched content representation using AR/VR technology and real-time presentation from sports event information. Part of this research was conducted in cooperation with the University of Tokyo and Niigata University.

In our research on a technology for effectively presenting 2D information that is difficult to describe in words, such as diagrams and graphs, to people with visual impairment, we continued our development of a finger-leading presentation system with a tactile display. This system combines a tactile display that conveys information using the unevenness and vibrations of pin arrays that move up and down with a method for conveying important points by leading the user's fingers with a kinetic robot arm(5). In FY 2018, we conducted development to enable the system to be used for many purposes, including helping visually impaired people learn characters and assisting deaf-blind people with their communication. A common way to convey characters to deaf-blind people or to visually impaired people who have not mastered braille is for a caregiver to trace the characters with a finger on the person's palm. We developed a method for guiding the user's finger according to the stroke order of characters and demonstrated through evaluation experiments that this method achieves a higher character recognition rate than character-tracing on the palm. This showed that the system is effective not only as a learning tool but also for helping deaf-blind people with their communication. Part of this research was conducted in cooperation with Tsukuba University of Technology.
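Guiding a finger according to stroke order can be thought of as turning an ordered list of strokes into a sequence of target positions for the arm. The following Python sketch shows this under simple assumptions: strokes are given as polylines in an arbitrary 2D coordinate system, positions are linearly interpolated, and the "move" and "trace" labels are hypothetical names for travelling to the start of a stroke and leading the finger along it. It is not the control method of the actual system.

```python
def interpolate(p, q, steps):
    """Return `steps` points evenly spaced from p (exclusive) to q (inclusive)."""
    return [
        (p[0] + (q[0] - p[0]) * i / steps, p[1] + (q[1] - p[1]) * i / steps)
        for i in range(1, steps + 1)
    ]


def guidance_path(strokes, steps_per_segment=4):
    """Convert strokes, listed in stroke order, into arm target positions.

    Each stroke is a list of (x, y) points. The result is a list of
    (mode, point) pairs, where "move" repositions the finger at the start
    of a stroke and "trace" leads it along the stroke.
    """
    path = []
    for stroke in strokes:
        path.append(("move", stroke[0]))
        for p, q in zip(stroke, stroke[1:]):
            for point in interpolate(p, q, steps_per_segment):
                path.append(("trace", point))
    return path


# A cross-shaped character with two strokes: horizontal first, then vertical.
cross = [[(0, 5), (10, 5)], [(5, 0), (5, 10)]]
for mode, point in guidance_path(cross, steps_per_segment=2):
    print(mode, point)
```

Separating "move" from "trace" would let a controller distinguish repositioning between strokes from the strokes themselves, a distinction that character recognition by touch is likely to depend on.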

Figure 5-4. Appearance of wristband haptic device

Figure 5-5. Internal structure of ball-type haptic device

■Olfactory information presentation method

To provide richer viewing experiences, we began research on an olfactory information presentation method. We surveyed previous studies and the latest trends in olfactory information presentation technologies and studied the kinds of broadcast content for which adding olfactory information is effective. We also began studying a method for presenting olfactory information effectively together with video.

| [References] | |
| --- | --- |
| (1) | T. Uchida, H. Sumiyoshi, T. Miyazaki, M. Azuma, S. Umeda, N. Kato, Y. Yamanouchi and N. Hiruma: "Evaluation of the Sign Language Support System for Viewing Sports Programs," International ACM SIGACCESS Conference on Computers and Accessibility, pp.361-363 (2018) |
| (2) | S. Umeda, N. Kato, M. Azuma, T. Uchida, N. Hiruma, H. Sumiyoshi and H. Kaneko: "Sign Language CG Production Support System to Modify Signing Expression with Machine Translation," Human Communication Group Symposium, A-7-5 (2018) |
| (3) | M. Azuma, T. Handa, T. Shimizu and S. Kondo: "Hapto-band: Wristband Haptic Device Conveying Information," Lecture Notes in Electrical Engineering, Vol.535 (3rd International Conference on Asia Haptics), Kajimoto, H. et al. eds., Springer, D2A17 (2018) |
| (4) | T. Handa, M. Azuma, T. Shimizu and S. Kondo: "A Ball-type Haptic Interface to Enjoy Sports Games," Lecture Notes in Electrical Engineering, Vol.535 (3rd International Conference on Asia Haptics), Kajimoto, H. et al. eds., Springer, D1A16 (2018) |
| (5) | T. Sakai, T. Handa and T. Shimizu: "Development of Prop-Tactile Display to Convey 2-D Information and Evaluation of Effect -Affection of Presentation Conditions on Powered Mechanical Leading Method for Spatial Position Cognition-," IEICE Trans. Information and Systems, Vol.J101-D, No.3, pp.628-639 (2018) |