Research Area

4.4 New image representation technique using real-space sensing

To efficiently produce more interesting and user-friendly video content for live sports coverage and other live programs, we are researching new image representation technologies and advanced program production technologies that use multiview images and sensor information.

■New image representation by visualization technology

We researched new image representation using an object-tracking technology and an area extraction technology based on video analysis and sensor information.

As an example of new image representation using object tracking, we continued our research on a "sword tracer" system that visualizes the movements of the sword tips in fencing with CG. The sword tracer system measures the positions of the two sword tips, which are wrapped with reflective tape, in real time by applying the object-tracking technology to video captured with an infrared camera(1). In FY 2018, we made the tracking process more robust by distinguishing between the two players' sword tips using the players' posture information obtained by analyzing visible-light camera images.
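The step of telling the two sword tips apart can be pictured with a minimal sketch. It assumes, purely for illustration, that the posture analysis yields a weapon-hand position for each player and that each tip belongs to the player whose hand gives the smaller total hand-to-tip distance. The function name, the data layout, and the distance rule are hypothetical and are not the method described in (1).

```python
import math

Point = tuple[float, float]


def assign_tips(tips: list[Point], hands: dict[str, Point]) -> dict[str, Point]:
    """Assign two detected tip positions to two players.

    Assumption (hypothetical rule): the correct pairing is the one that
    minimizes the summed distance between each player's weapon hand and
    the tip assigned to that player.
    """
    (name_a, hand_a), (name_b, hand_b) = hands.items()
    tip_0, tip_1 = tips

    # Cost of keeping the detection order versus swapping it.
    straight = math.dist(hand_a, tip_0) + math.dist(hand_b, tip_1)
    swapped = math.dist(hand_a, tip_1) + math.dist(hand_b, tip_0)

    if straight <= swapped:
        return {name_a: tip_0, name_b: tip_1}
    return {name_a: tip_1, name_b: tip_0}
```

With only two players there are just two possible pairings, so comparing both costs directly is enough; a general assignment solver would only be needed for more targets.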

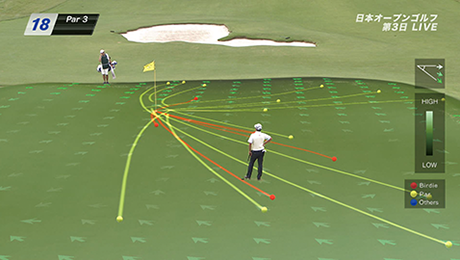

We researched a technology for visualizing the trajectories of putts and shots in golf events. In FY 2018, we developed a system that visualizes the putting trajectory in CG by detecting and tracking the golf ball in real time in images captured with a camera installed near the green. The system achieved a tracking process that robustly accommodates environmental changes by sequentially learning the image features of the ball frame by frame. We used this system in the live program "The 83rd Japan Open Golf Championship," aired from October 11 to 14, 2018 (Figure 4-5). For shots, by using sensors that measure the camera attitude angle, we superimposed trajectory CG not only on images from a fixed camera but also on images from a handheld camera, whose attitude changes.
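The idea of learning the ball's image features sequentially can be illustrated with a small sketch. It assumes that each frame provides candidate detections as (position, feature) pairs, that a feature is a short numeric vector such as a mean color, and that the appearance model is updated as an exponential moving average. These choices, and the names `track` and `rate`, are illustrative assumptions rather than the system's actual features or learning method.

```python
Point = tuple[float, float]
Feature = tuple[float, ...]
Candidate = tuple[Point, Feature]


def feature_distance(a: Feature, b: Feature) -> float:
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def track(frames: list[list[Candidate]], initial_model: Feature,
          rate: float = 0.2) -> tuple[list[Point], Feature]:
    """Follow the ball through frames while adapting its appearance model.

    In each frame, the candidate closest to the current model is taken
    as the ball, and the model then moves toward that candidate's
    feature (hypothetical moving-average update).
    """
    model = initial_model
    path: list[Point] = []
    for candidates in frames:
        position, feature = min(
            candidates, key=lambda c: feature_distance(model, c[1]))
        path.append(position)
        # Blend the new observation into the model so that gradual
        # lighting changes are absorbed frame by frame.
        model = tuple((1 - rate) * m + rate * f
                      for m, f in zip(model, feature))
    return path, model
```

A small `rate` makes the model slow to drift onto a distractor, while a large `rate` adapts faster to changes such as shadows crossing the green.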

We are researching a "multi-motion" system that extracts the athlete region from camera images and shows the athlete's motion with a stroboscopic effect. In FY 2018, we increased the speed of image generation by optimizing the program and improved the operation interface with a view to its use for commentary in programs. This system was used in commentary scenes(2) of the live broadcast of "The 60th NHK Trophy National Ski Jump Tournament," aired on November 4, 2018 (Figure 4-6).
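A stroboscopic display of this kind can be sketched as a layering operation: athlete pixels extracted from several frames are pasted, in time order, onto a single background image. The sketch below assumes that images are nested lists of pixel values and that the region extraction has already produced one Boolean mask per frame. The function name and data layout are hypothetical and do not reflect the system in (2).

```python
Image = list[list[int]]
Mask = list[list[bool]]


def stroboscopic_composite(background: Image, frames: list[Image],
                           masks: list[Mask]) -> Image:
    """Overlay the athlete region of each frame onto one background.

    Frames are processed in time order, so where regions overlap the
    later pose is drawn on top of the earlier one.
    """
    out = [row[:] for row in background]  # copy; leave the input intact
    for frame, mask in zip(frames, masks):
        for y, mask_row in enumerate(mask):
            for x, is_athlete in enumerate(mask_row):
                if is_athlete:
                    out[y][x] = frame[y][x]
    return out
```

Choosing which frames to include (for example, every n-th frame) controls how densely the poses are spaced along the jump.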

We continued our development of "Sports 4D Motion," a new image representation technology that combines multiview images and 3D CG. In FY 2018, we developed an online processing system for Sports 4D Motion(3). This system can generate Sports 4D Motion images within several tens of seconds after the completion of capture by using high-speed image processing, such as projective transformation of 4K multiview images and 3D CG synthesis. We exhibited this system at the NHK STRL Open House 2018 and confirmed its effectiveness through performance evaluation in demonstration experiments.
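For reference, a projective transformation maps an image point through a 3×3 homography matrix followed by division by the homogeneous coordinate. The sketch below shows only this general operation for a single point. The matrix values used in practice would come from camera calibration, which is outside this sketch, and the function name is an assumption.

```python
Matrix3 = list[list[float]]
Point = tuple[float, float]


def apply_homography(h: Matrix3, point: Point) -> Point:
    """Map a 2D point through a 3x3 homography matrix."""
    x, y = point
    xh = h[0][0] * x + h[0][1] * y + h[0][2]
    yh = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if w == 0:
        raise ValueError("point maps to infinity under this homography")
    # Dividing by w is what makes the mapping projective, not affine.
    return (xh / w, yh / w)
```

Warping a whole 4K image applies the same mapping (usually its inverse, with interpolation) to every pixel, which is why this step benefits from high-speed processing.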

Figure 4-5. Display of the golf putting trajectory at the 83rd Japan Open Golf Championship

Figure 4-6. Multi-motion display at the 60th NHK Trophy National Ski Jump Tournament

■Advanced live program production technology

We began research on key technologies for a "meta-studio" that achieves new video representation and more efficient live program production by utilizing various object information obtained from images and sensors.

In our research on automatic program production technologies, in FY 2018, we began developing an AI robotic camera that performs automatic shooting in accordance with the situation. As a scene analysis technology for soccer events, we prototyped software that converts the subjects into objects and simultaneously extracts image features such as the positions and velocities of the players and the ball, the players' face directions, and the colors and types of their shirts. We improved the accuracy of face direction estimation and increased the speed for online processing by introducing a classifier based on deep convolutional neural networks into a method that we previously developed. We also developed a rule-based camera work generation algorithm on the basis of shooting know-how that we learned from experienced camera operators for live program production. In addition, we verified the operation of automatic camera work with a robotic camera simulator that uses cutouts from 8K video.
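To give a sense of what rule-based camera work generation can look like, the sketch below turns scene analysis output into a framing decision with three simple rules. Every rule, threshold, and name in it (the 15 m neighborhood, the 0.7/0.3 weighting, the 10 m/s speed limit, `Scene`, `choose_framing`) is a made-up placeholder and is not taken from the know-how of the camera operators or from the algorithm described above.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    ball_x: float            # ball position along the pitch, in metres
    ball_speed: float        # ball speed, in metres per second
    player_xs: list[float]   # player positions along the pitch, in metres


def choose_framing(scene: Scene, prev_pan_x: float,
                   max_step: float = 1.5) -> tuple[float, str]:
    """Return the next pan target and shot size from hypothetical rules."""
    # Rule 1: aim between the ball and the players close to it.
    nearby = [x for x in scene.player_xs if abs(x - scene.ball_x) <= 15.0]
    centroid = sum(nearby) / len(nearby) if nearby else scene.ball_x
    target = 0.7 * scene.ball_x + 0.3 * centroid

    # Rule 2: limit the pan change per frame to keep motion smooth.
    step = max(-max_step, min(max_step, target - prev_pan_x))
    pan_x = prev_pan_x + step

    # Rule 3: widen the shot when the ball is moving fast.
    shot = "wide" if scene.ball_speed > 10.0 else "medium"
    return pan_x, shot
```

Keeping each rule as a separate, readable step makes it straightforward to adjust one piece of shooting know-how without touching the others.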

We made progress in our research on a studio robot that enables natural joint performance between live performers and CG characters in a studio. In FY 2018, we developed a real-time unwanted object removal technology that hides the exposed parts of the robot when it is too large to be completely hidden behind the CG superimposed at the robot position in the video. Removing an unwanted object requires background information, such as the structure and surface pattern behind the object, and the position and attitude information of the camera. This technology measures the background information in advance as a CG object and stores it in the CG rendering device. During capture, it renders the CG object under the same position and attitude conditions as the camera and thus generates background images in which the unwanted object does not exist. It then uses the robot position information to replace the area of the unwanted object in the captured images with the CG background images. This allows only the unwanted object to be removed in real time.
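The final replacement step can be sketched as a per-pixel selection among three sources: the CG character, the pre-measured background rendered from the current camera pose, and the captured image. The sketch assumes that both renders and two Boolean masks (robot area and character area) are already available for the current frame. The function name and the nested-list image layout are hypothetical.

```python
Image = list[list[object]]
Mask = list[list[bool]]


def remove_unwanted_object(camera: Image, background_cg: Image,
                           character_cg: Image, robot_mask: Mask,
                           character_mask: Mask) -> Image:
    """Compose one output frame with the exposed robot area hidden."""
    out: Image = []
    for y, row in enumerate(camera):
        out_row = []
        for x, pixel in enumerate(row):
            if character_mask[y][x]:
                # The CG character covers this pixel.
                out_row.append(character_cg[y][x])
            elif robot_mask[y][x]:
                # Robot is exposed here: show the rendered background.
                out_row.append(background_cg[y][x])
            else:
                # Neither robot nor character: keep the captured pixel.
                out_row.append(pixel)
        out.append(out_row)
    return out
```

Because only pixels inside the robot mask are replaced, any mismatch between the rendered background and the real studio is confined to that area.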

We developed a general-purpose camera attitude sensor that can be used with various capture equipment such as handheld cameras and crane cameras. In FY 2018, we prototyped a system that measures the camera attitude angle with a micro-electro-mechanical-systems (MEMS) inertial sensor and a fiber optic gyroscope (FOG), the horizontal position with a laser sensor, and the height with an RGB-D sensor, and conducted verification experiments. We demonstrated that these sensors, mounted on a camera, can stably measure in real time the data required for CG synthesis.
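To illustrate why camera position and attitude are needed for CG synthesis, the sketch below projects a 3D point into the image using a simple pinhole model. It assumes world axes with X to the right, Y up, and Z forward, a pan angle about the vertical axis, a tilt angle that is positive when looking up, no roll, and no lens distortion. These conventions and the function name are assumptions made for this example, not the prototype's actual model.

```python
import math
from typing import Optional

Vec3 = tuple[float, float, float]
Point = tuple[float, float]


def project_point(point: Vec3, cam_pos: Vec3, pan: float, tilt: float,
                  focal_px: float, center: Point) -> Optional[Point]:
    """Project a world point to pixel coordinates, or None if behind."""
    dx, dy, dz = (p - c for p, c in zip(point, cam_pos))
    sp, cp = math.sin(pan), math.cos(pan)
    st, ct = math.sin(tilt), math.cos(tilt)

    # Express the offset in the camera's right, up, and forward axes.
    x_cam = dx * cp - dz * sp
    y_cam = -dx * sp * st + dy * ct - dz * cp * st
    z_cam = dx * sp * ct + dy * st + dz * cp * ct
    if z_cam <= 0:
        return None  # the point is behind the camera

    # Pinhole projection; image v grows downward, so y is negated.
    u = center[0] + focal_px * x_cam / z_cam
    v = center[1] - focal_px * y_cam / z_cam
    return (u, v)
```

An error in any of the measured values shifts the projected CG relative to the live image, which is why stable real-time measurement matters.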

| [References] | |
| --- | --- |
| (1) | M. Takahashi, S. Yokozawa, H. Mitsumine, T. Itsuki, M. Naoe, and S. Funaki: "Sword Tracer: Visualization of Sword Trajectories in Fencing," Proc. of the ACM SIGGRAPH 2018 Talks (2018) |
| (2) | A. Arai, H. Morioka, H. Mitsumine, and D. Kato: "Development of Multi-Motion System Considering Live Production," Proc. of ITE Winter Annual Convention, 13D-4 (2018) (in Japanese) |
| (3) | K. Ikeya, J. Arai, T. Mishina, and M. Yamaguchi: "Capturing method for integral three-dimensional imaging using multiviewpoint robotic cameras," J. Electron. Imaging 27(2), 023022 (2018) |