Research Area

3.2 Device linkage services

We made progress in our research on device linkage services that will offer new TV experiences in various scenes of daily life by taking advantage of the internet and linking TV with various terminals such as IoT (Internet of Things)-enabled devices.

■Hybridcast Connect X

To connect daily activities with broadcasting services more easily, we are developing Hybridcast Connect X, which enables viewers to start the interaction with Hybridcast applications on a TV from a smartphone or IoT-enabled devices that they use every day.

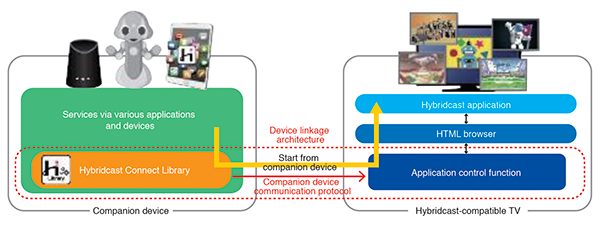

We developed a device linkage protocol to connect TV with IoT-enabled devices (Figure 3-5)(1), which the IPTV Forum Japan then released as a technical standard. As concrete use cases, we demonstrated the protocol implemented in news, SNS and other smartphone applications (apps) and in applications for interactive smart speakers at the NHK STRL Open House 2018 and the International Broadcasting Convention (IBC) 2018. The demonstration showed that implementing this protocol makes it possible to start linkage with broadcasting services from smartphone applications and notification functions in daily use, and thus enables services that offer new user experiences(2).

In addition, we developed a software module called "Hybridcast Connect Library"(3) that allows easy implementation of this protocol in smartphone applications and IoT-enabled devices, as well as a test tool to verify protocol operation. We exhibited them at events such as the NHK STRL Open House 2018 and Inter BEE 2018 and also helped the IPTV Forum Japan to hold workshops to promote this technology.

Figure 3-5. Device linkage protocol and Hybridcast Connect Library

■Linkage of TV viewing with daily activities

With the aim of realizing more convenient broadcast-broadband services that connect TV viewing with internet services and daily activities outside of TV viewing, we are conducting R&D on a content-matching technology, which is a key technology for linking broadcast content with the data of various applications and IoT-enabled devices.

At the NHK STRL Open House 2018, we exhibited multiple use cases of Hybridcast Connect Library, such as linkage between a smartphone application and broadcasting, in cooperation with commercial broadcasters and manufacturers. The exhibits demonstrated the feasibility of enhancing services and improving convenience through data linkage with applications and IoT-enabled devices (Figure 3-6).

To enable viewing data to be utilized for various services under the management of individual viewers, we investigated the trends in personal data management methods and examined those methods. As an example of service linkage among different industries, we prototyped a system that allows information on programs watched and places visited during a drive to be shared among various services, such as broadcasting and a car navigation service, in accordance with the viewer’s wishes, and exhibited it at the NHK STRL Open House 2018.

We conducted field experiments using actual TV programs in Tokyo and Hokkaido and, through questionnaire- and interview-based evaluations, identified expectations and challenges for providing services that link the TV experience with life activities.

We also studied a way of structuring program data and making it open. We prototyped a calendar application that incorporates linkage with program information and a function to interact with TV using Hybridcast Connect Library, and used it to verify the feasibility of expanding access to program viewing and to identify issues with the content-matching technology.
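
As a rough illustration of linking structured program data with calendar entries, the sketch below ranks programs by the keywords they share with an entry. The report does not describe the actual matching method, so the keyword-overlap scoring, the `match_programs` function and the record fields (`title`, `keywords`) are all assumptions made for this example.

```python
def match_programs(programs, entry_keywords):
    """Rank programs by how many keywords they share with a calendar entry."""
    wanted = {k.lower() for k in entry_keywords}
    scored = []
    for program in programs:
        shared = wanted & {k.lower() for k in program["keywords"]}
        if shared:
            scored.append((len(shared), program["title"]))
    # Highest overlap first; ties are broken alphabetically by title.
    return [title for _, title in sorted(scored, key=lambda s: (-s[0], s[1]))]


# Hypothetical structured program data (not taken from the report).
programs = [
    {"title": "Hokkaido Food Trip", "keywords": ["Hokkaido", "ramen", "travel"]},
    {"title": "Evening News", "keywords": ["news", "weather"]},
    {"title": "Snow Festival Live", "keywords": ["Hokkaido", "festival"]},
]

# A calendar entry such as "Trip to Hokkaido" might yield these keywords.
suggestions = match_programs(programs, ["hokkaido", "travel"])
```

A calendar application could present `suggestions` next to the entry and then hand the chosen program to the TV through the device linkage function.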

We actively promoted examples of linkage between TV and smartphones, which are the outcomes of our research on content-matching technology, domestically and internationally through exhibitions such as Connected Media Tokyo 2018 and IBC 2018. We also proposed content-oriented IoT, a technology for controlling various IoT-enabled devices in synchronization with content, and researched content description methods(4).
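
The idea of controlling devices in synchronization with content can be sketched as a list of timed cues attached to the content timeline. The cue format below (`Cue`, `time_s`, `device`, `command`) is hypothetical and is not the content description method studied in (4); it only shows how a player might decide which device commands are due as playback advances.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cue:
    time_s: float  # position on the content timeline, in seconds
    device: str    # hypothetical device identifier
    command: str   # hypothetical command name


def due_cues(cues, prev_pos_s, pos_s):
    """Return cues whose time falls in (prev_pos_s, pos_s], ordered by time."""
    return sorted(
        (c for c in cues if prev_pos_s < c.time_s <= pos_s),
        key=lambda c: c.time_s,
    )


# Hypothetical description for one piece of content.
cues = [
    Cue(12.0, "light", "dim"),
    Cue(5.0, "speaker", "volume_up"),
    Cue(30.0, "light", "restore"),
]

# Playback moved from 0 s to 15 s since the last check.
fired = [c.command for c in due_cues(cues, 0.0, 15.0)]
```

Using a half-open interval means a cue fires exactly once as long as each check starts where the previous one ended.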

Figure 3-6. Exhibit of content-matching technology at NHK STRL Open House 2018

■Framework for producing content capable of service linkage

To construct a highly flexible and extensible content production environment by combining various media processes, we participated in the EBU Media Cloud and Microservice Architecture (MCMA) technical project that specifies common interfaces. We contributed to the development and publication of a software library that can be used for constructing an MCMA-compliant system on the cloud. We built a metadata tagging system that can link AI tools across multiple clouds by using the library and exhibited it at IBC 2018 as an MCMA activity group(5).

■International promotion of Hybridcast

We are developing a system for producing equivalent applications that operate on both Hybridcast and HbbTV2, which are HTML5-based Integrated Broadcast-Broadband (IBB) systems used in Japan and Europe, respectively. In FY 2018, we tested the feasibility of the system by using it to create test applications covering various aspects. The applications were designed to test multilingual support, dynamic graphics rendering and MPEG-DASH playback, and we confirmed that they performed and behaved well. In addition, through a detailed investigation of applicability, we identified the conditions for using Hybridcast libraries on HbbTV2 for MPEG-DASH playback (Hybridcast (4K) video). We also presented our equivalent application production system at the HbbTV Symposium, a developer conference for HbbTV, and built cooperative relationships with research organizations in Europe. Additionally, we gave presentations on web technologies for Hybridcast Connect X and Hybridcast (4K) video at the World Wide Web Consortium (W3C).

■TV-watching robot

We are researching a robot that watches TV with a viewer, serving as a partner to make TV viewing more enjoyable. We developed a TV-watching robot that has functions to understand the program being viewed, generate utterances autonomously and talk to the viewer and exhibited it at the NHK STRL Open House 2018.

In FY 2018, we developed a template-based utterance text generation method that generates an utterance text by extracting keywords from the program being viewed and combining them with a template sentence containing emotional expressions prepared from past subtitles(6)(7). We also added a function to generate a question that can be answered with a simple word such as "yes" or "no" to encourage the viewer’s utterance. In addition to the template-based utterance text generation, we began studying a way to generate an utterance text automatically from program images. In FY 2018, we implemented a method for generating an utterance text from the images of the program being viewed by using an object detection technology using deep neural networks and a caption generation technology.
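
The template-based approach can be illustrated with a minimal sketch: pick a keyword from the program text and slot it into a template sentence, or into a yes/no question. The templates, the vocabulary-lookup keyword extraction and the function names here are assumptions for illustration; the templates in the actual system were prepared from past subtitles and are not reproduced in this report.

```python
import random

# Hypothetical templates containing emotional expressions.
TEMPLATES = [
    "Wow, {keyword} looks amazing!",
    "I love {keyword}!",
]
# Hypothetical template for a question answerable with "yes" or "no".
QUESTION_TEMPLATE = "Do you like {keyword}?"


def extract_keywords(subtitle, vocabulary):
    """Return vocabulary words found in the subtitle, in order of appearance."""
    words = [w.strip(".,!?").lower() for w in subtitle.split()]
    return [w for w in words if w in vocabulary]


def generate_utterance(subtitle, vocabulary, rng, ask_question=False):
    """Combine the first extracted keyword with a template, or return None."""
    keywords = extract_keywords(subtitle, vocabulary)
    if not keywords:
        return None
    if ask_question:
        template = QUESTION_TEMPLATE
    else:
        template = rng.choice(TEMPLATES)
    return template.format(keyword=keywords[0])


subtitle = "Today we visit a ramen shop in Sapporo."
vocabulary = {"ramen", "sapporo"}
question = generate_utterance(subtitle, vocabulary, random.Random(0), ask_question=True)
```

Passing the random generator in explicitly keeps the choice of template reproducible, which is convenient when comparing generated utterances across runs.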

We also developed a cloud interface for using video and speech recognition services in external cloud environments to extract keywords from the program being viewed. This makes it possible to use different cloud infrastructures together and convert their outputs to a common format, so that services can be selected according to the purpose of the processing. Moreover, the use of sequential-parallel processing achieved responses within a certain delay time.
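
One way to picture such an interface is a set of adapters that map each service's response to a shared record format, with the services called in parallel under a deadline. The sketch below is only that: the two service functions are local stand-ins rather than real cloud APIs, the record fields are assumed, and since the report does not define "sequential-parallel processing", the code shows just a parallel fan-out with a timeout as one possible reading.

```python
from concurrent.futures import ThreadPoolExecutor, wait


# Hypothetical stand-ins for external services, each with its own response shape.
def video_service(frame):
    return {"labels": [{"name": "dog", "score": 0.9}]}


def speech_service(audio):
    return {"transcript": "a dog runs", "confidence": 0.8}


# Adapters convert each response into common records:
# {"keyword": str, "confidence": float, "source": str}
def adapt_video(resp):
    return [{"keyword": item["name"], "confidence": item["score"], "source": "video"}
            for item in resp["labels"]]


def adapt_speech(resp):
    return [{"keyword": word, "confidence": resp["confidence"], "source": "speech"}
            for word in resp["transcript"].split()]


def recognize(frame, audio, timeout_s=1.0):
    """Call both services in parallel and merge the results that arrive in time."""
    jobs = [(video_service, frame, adapt_video),
            (speech_service, audio, adapt_speech)]
    pool = ThreadPoolExecutor(max_workers=len(jobs))
    try:
        futures = {pool.submit(svc, arg): adapt for svc, arg, adapt in jobs}
        done, _late = wait(list(futures), timeout=timeout_s)
        records = []
        for fut in done:
            records.extend(futures[fut](fut.result()))
    finally:
        # Do not block on services that missed the deadline.
        pool.shutdown(wait=False)
    return sorted(records, key=lambda r: (r["source"], r["keyword"]))


records = recognize(b"", b"")
```

Because downstream code sees only the common records, a service can be swapped for another by writing a new adapter.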

To realize dialogs triggered by utterances generated by the robot, we equipped our TV-watching robot under development with an external dialog system. We also organized the issues of a speech-based conversation system, which, unlike a text-based dialog system, must cope with the absence of a clear response from the user and with speech recognition errors.

For the robot to initiate an action toward a nearby person during TV viewing, recognizing the person and timing the action are important. Images that can be captured with a camera used for recognizing a person vary greatly according to the angle of view, the installation position in the robot and the presence or absence of a rolling mechanism. We therefore conducted a comparative verification of person detection capability using images from multiple cameras, including a fisheye camera, a camera array and a camera mounted on the robot’s head, and obtained knowledge about the characteristics of each type.

Part of this research was conducted in cooperation with KDDI Research, Inc.

■Viewing experiments using TV-watching robot

We conducted TV viewing experiments to identify the difference between conversations between people and conversations involving a robot during TV viewing. We asked two people on good terms to freely watch any program they liked and observed their viewing behavior with and without a TV-watching robot(8). Using the observation data, we investigated the listening behavior of people in response to different types of utterances and to the robot’s motion of turning round. The results demonstrated that it was easier to get a response from people when the robot turned round and talked to them and that people often made utterances expressing their feelings while watching TV programs.

Figure 3-7. Scenes of viewing experiments using TV-watching robot

■Questionnaire survey on communication robots

To develop a robot that watches TV in the viewer’s company, we conducted a market survey by questionnaire to understand to what extent communication robots are recognized and accessed by the general public. The survey results showed that 86% of respondents think of robots as something that supports people, that there are still only a few opportunities to actually come into contact with robots, and that older people are more likely to expect a human-shaped robot(9).

| [References] | |
| --- | --- |
| (1) | H. Ohmata and M. Ikeo: "User-Centric Companion Screen Architecture for Improving Accessibility of Broadcast Services," IBC2018 Conference (2018) |
| (2) | H. Ohmata, M. Ikeo, H. Ogawa, C. Yamamura, T. Takiguchi and H. Fujisawa: "Companion Screen Architecture and System Model for Smooth Collaboration between TV Experience and Life Activities," IPSJ Journal, vol.60, no.1, 2019, p.223-239 (2018) (in Japanese) |
| (3) | K. Hiramatsu, H. Ohmata, M. Ikeo, T. Takiguchi, A. Fujii and H. Fujisawa: "A Proposal of Hybridcast Connect Library to enable Flexible Service Provision," Proc. of the ITE Annual Convention, 32D-3 (2018) (in Japanese) |
| (4) | H. Ogawa, H. Ohmata, M. Ikeo, A. Fujii and H. Fujisawa: "System Architecture for Content-Oriented IoT Services," IEEE International Conference on Pervasive Computing and Communications (2019) |
| (5) | https://tech.ebu.ch/docs/metadata/MCMA_IBC2018_Final.pdf |
| (6) | Y. Kaneko, Y. Hoshi, Y. Murasaki and M. Uehara: "Utterance Generation based on Keywords of TV Programs," IPSJ SIG Technical Reports, vol.2018-IFAT-131, no.2 (2018) (in Japanese) |
| (7) | Y. Kaneko, Y. Hoshi, Y. Murasaki and M. Uehara: "A Study on Estimating Alternative Word of Unknown Keyword from Surrounding Words," Proc. of the ITE Annual Convention, 14B-1 (2018) (in Japanese) |
| (8) | Y. Hoshi, Y. Kaneko, Y. Murasaki and M. Uehara: "Basic Survey on TV Watching with Robot by TV Watching Experiment," Proc. of the ITE Annual Convention, 14B-2 (2018) (in Japanese) |
| (9) | Y. Murasaki, Y. Kaneko, Y. Hoshi and M. Uehara: "A Study on Impressions of Communication Robots," IPSJ SIG Technical Reports, vol.2018-EIP-81, no.13 (2018) (in Japanese) |