
Kazuhisa IGUCHI, Research Engineer, Advanced Audio & Video Coding

I have been with NHK Science and Technical Research Laboratories since 1993. My research interest is image processing, and I have worked on motion vector estimation and motion compensation for video standard conversion and on object-based image processing. Since 1999, my research has focused on scene description languages. Existing scene description languages are designed mainly for presenting scenes to users. To make program production more efficient, I believe it is useful to apply a scene description language to broadcast program production. If a standardized scene description language that is independent of specific hardware can be used in program production, editors and studios will be able to collaborate and to easily modify or reuse existing image scenes.

The digitalization of broadcasting is increasing the number of available channels, and the spread of the Internet is driving demand for a wider variety of programming. STRL is developing efficient program production technologies that can produce quality programs in a fraction of the time and cost of conventional production systems. As part of this research, we developed a scene description language called media object interlock and synthesis (MIKSS for short, pronounced 'mix') that can describe the functions used in image composition, video effects creation, and editing. The language was designed on the basis of an examination of the functions needed for video production.

The objects that make up a video scene, such as people and the background, are called video components. In addition to specifying the display position and time of each component, MIKSS makes it easy to describe video production functions. These include video editing, which uses an "IN" point (the start of a video sequence) and an "OUT" point (the end of a video sequence); scene transitions (switching from one video to another) such as wipes and dissolves (switching by overlapping one video with the next); and video effects such as color conversion and transformation. The language can also describe interactive video scenes that present additional video information when a user clicks a mouse or takes some other action.
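The timing functions described above can be sketched in code. MIKSS's actual syntax is not shown in this article, so the following is a minimal, hypothetical Python model: the class `VideoComponent`, its fields, and the function `dissolve_weights` are illustrative assumptions, not MIKSS constructs. It shows how an IN/OUT pair maps scene time to a position in the source clip, and it models a dissolve as a linear cross-fade, which is one common choice rather than necessarily the one MIKSS uses.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class VideoComponent:
    """Hypothetical stand-in for one video component in a scene description."""
    source: str       # clip identifier, e.g. a file name (assumed)
    x: int            # display position in pixels (assumed units)
    y: int
    start: float      # scene time (s) at which the component appears
    in_point: float   # IN point: offset (s) into the clip where playback starts
    out_point: float  # OUT point: offset (s) into the clip where playback stops

    @property
    def end(self) -> float:
        """Scene time at which the component disappears."""
        return self.start + (self.out_point - self.in_point)

    def source_time(self, t: float) -> Optional[float]:
        """Map scene time t to a time in the source clip, or None if not shown."""
        if self.start <= t < self.end:
            return self.in_point + (t - self.start)
        return None


def dissolve_weights(t: float, begin: float, duration: float) -> Tuple[float, float]:
    """Linear dissolve (cross-fade) from clip A to clip B beginning at `begin`.

    Returns (weight_a, weight_b); the weights always sum to 1, so each output
    pixel would be weight_a * A + weight_b * B.
    """
    if duration <= 0:
        raise ValueError("duration must be positive")
    alpha = min(max((t - begin) / duration, 0.0), 1.0)
    return (1.0 - alpha, alpha)
```

For example, `VideoComponent("fish.mov", 120, 80, start=2.0, in_point=5.0, out_point=9.0)` appears at 2 s and disappears at 6 s, and at 3 s it shows source time 6 s; `dissolve_weights(1.5, 1.0, 2.0)` returns `(0.75, 0.25)`, a quarter of the way through the transition.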


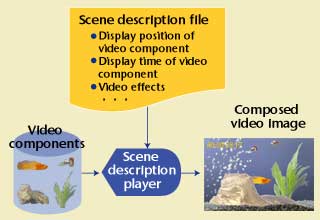

Figure 1: Video composition by scene description

Figure 1 shows an example of video production using MIKSS. Moving images, such as the fish and water plants in the figure, are the video components. First, a scene description file specifying the display position and time of each video component is created. This file is read by a scene description player, which displays a video scene containing the described components. The video image can then be modified easily, for example by replacing a video component or moving its display position, simply by rewriting the MIKSS scene description file.
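The workflow of Figure 1 can be illustrated with a small sketch in which a player reads a textual scene description and decides what to show at a given time. The JSON layout, the field names (`start`, `end`, `layer`, and so on), and the function `visible_components` are hypothetical stand-ins, not the MIKSS file format; the string `SCENE_TEXT` plays the role of the scene description file.

```python
import json

# Hypothetical textual scene description. JSON is used only as a stand-in;
# this is not MIKSS syntax. Times are in seconds, positions in pixels.
SCENE_TEXT = """
{
  "components": [
    {"name": "background", "x": 0, "y": 0, "start": 0.0, "end": 10.0, "layer": 0},
    {"name": "water_plants", "x": 40, "y": 200, "start": 0.0, "end": 10.0, "layer": 1},
    {"name": "fish", "x": 120, "y": 80, "start": 2.0, "end": 6.0, "layer": 2}
  ]
}
"""


def visible_components(scene_text: str, t: float):
    """Return (name, x, y) for each component shown at scene time t.

    Components are ordered back to front by layer, the order in which a
    player would draw them.
    """
    scene = json.loads(scene_text)
    shown = [c for c in scene["components"] if c["start"] <= t < c["end"]]
    shown.sort(key=lambda c: c["layer"])
    return [(c["name"], c["x"], c["y"]) for c in shown]
```

Moving the fish is then only a text edit: `visible_components(SCENE_TEXT.replace('"x": 120', '"x": 300'), 3.0)` places it at x = 300 without touching any video data.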

Figure 2 shows a conceptual diagram of a video production system in which video components are shared over a network. Each area enclosed by broken lines represents one editing system. Because the scene description language is common to all of them, a producer can edit on any editing system, as long as he or she has the scene description file. Since a scene description file can be created on a simplified editing system that handles only text data, the cost of program production facilities can be reduced.

Future improvements to the language will include adding functions for video production via networks and making the video scenes described by MIKSS available for use in data broadcasting and on the Internet.


Figure 2: Video production system using scene description language
