1.5 Audio Technologies

We are conducting R&D on technologies for next-generation audio services, including technologies to generate sound-source information, so that sounds can be represented at the viewer’s location as though they are at the location of the sound materials; technologies to generate a sound field that represents the differing distances between the listener and the sound sources; and an object-based audio technology that will enable implementation of services that allow viewers to customize program audio according to their playback environment and their preferences. We are also advancing standardization of these technologies both domestically and internationally.

Generating Sound-source Information and Sound-field Reproduction Technologies for Spatial Representation of Sound

We are conducting R&D on audio technologies to support reality imaging, focused on AR and VR.

To represent the changes in how a person’s voice sounds depending on which way the speaker is facing, we are developing methods to model human-voice 3D radiation characteristics. Using a database of human-voice 3D radiation characteristics that we created in FY2019, we studied methods to estimate sound pressure at unmeasured points from sound pressures at measured points, and showed that the accuracy of estimation does not depend greatly on the estimation method. We also conducted experiments to study whether listeners could distinguish between human voices radiated in different directions. The results showed that a difference of 15 degrees is imperceptible, and that a difference of 30 degrees is perceptible but not annoying in most directions.

To reproduce sound continuously in 3D space at distances from nearby to far away using headphones, we are also researching methods to model the head-related transfer function (HRTF, the propagation characteristics of sound from a sound source to both ears), including distance and direction. In FY2020, we studied methods for modeling in 3D space, adding a distance variable to the azimuth and elevation angle variables studied earlier. To control the distance and direction using a measured HRTF, so that a sound can be heard as though from any location in 3D space, we proposed a model that uses higher-order singular value decomposition to separate variables for distance, azimuth, elevation and frequency out of the measured HRTF data. In FY2019, we attempted the method using horizontal 2D HRTF measurement data, including distance, and confirmed that we were able to separate variables for direction, distance and frequency.
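The variable separation described above can be illustrated with a minimal sketch of a higher-order singular value decomposition. This is not the proposed model itself. It assumes a real-valued HRTF array (for example, magnitudes in dB) indexed by distance, azimuth, elevation and frequency; the array shape, the random placeholder data and all function names are hypothetical.

```python
import numpy as np


def unfold(tensor, mode):
    """Matricize `tensor` so that axis `mode` becomes the rows."""
    return np.moveaxis(tensor, mode, 0).reshape(tensor.shape[mode], -1)


def mode_product(tensor, matrix, mode):
    """Multiply `tensor` by `matrix` (shape J x I) along axis `mode` (size I)."""
    moved = np.moveaxis(tensor, mode, -1)   # bring the target axis last
    result = moved @ matrix.T               # contract it with the matrix
    return np.moveaxis(result, -1, mode)    # put the new axis back in place


def hosvd(tensor):
    """Return (core, factors): one orthonormal factor matrix per variable."""
    factors = []
    for mode in range(tensor.ndim):
        u, _, _ = np.linalg.svd(unfold(tensor, mode), full_matrices=False)
        factors.append(u)
    core = tensor
    for mode, u in enumerate(factors):
        core = mode_product(core, u.T, mode)  # real data: transpose suffices
    return core, factors


def reconstruct(core, factors):
    """Rebuild the tensor from the core and the per-variable factors."""
    out = core
    for mode, u in enumerate(factors):
        out = mode_product(out, u, mode)
    return out


# Placeholder data standing in for measured HRTFs (assumed shape):
# 3 distances x 4 azimuths x 2 elevations x 5 frequency bins.
rng = np.random.default_rng(0)
hrtf = rng.standard_normal((3, 4, 2, 5))
core, factors = hosvd(hrtf)
distance_basis, azimuth_basis, elevation_basis, frequency_basis = factors
```

Each factor matrix holds basis vectors for one variable only, so in a sketch like this, distance or direction could in principle be changed by interpolating rows of a single factor before reconstructing.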

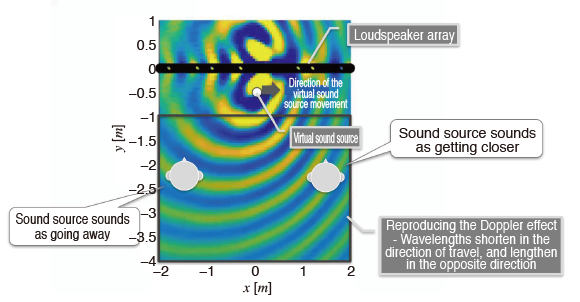

We are also researching how to implement sound representations in which the sound image leaps out from the TV and approaches the viewer. In FY2020, to present such a sound image, we developed a spatial-frequency-domain model of, and a reproduction method for, the sound field generated by a sound source moving along an arbitrary path. We combined the developed model with a conventional sound field reproduction technology using a loudspeaker array and confirmed through computer simulation that the modeled sound field can be reproduced effectively. We also implemented this technology and confirmed its effect through listening trials (Figure 1-17)(1).

Next-generation Broadcasting Formats with Object-based Audio

We are researching next-generation broadcasting formats using object-based audio, to implement next-generation audio services that will allow program audio to be customized according to the viewing environment and viewer’s preferences.

Object-based audio is a scheme in which materials, including sound signals and audio metadata, are broadcast and the receiver reconstructs the sound signals according to the playback environment at the receiver and the viewer’s preferences. One service envisioned for object-based audio is the ability to freely switch among sound objects such as commentary and background music at the receiver. To produce switchable sound objects efficiently, we are developing technology that uses the sound level of the main dialogue object as a reference and automatically adjusts the level of alternative dialogue objects when they are switched in. In FY2020, we proposed an algorithm that combines multiple objective indices, each measured over a different effective measurement duration, in proportions that depend on the similarity of utterance timing between the main dialogue and the alternative dialogue to be switched in. We also verified, through subjective evaluation, that sound adjusted using this method is closer to that produced by an audio engineer than sound adjusted using a method proposed in FY2019, which used a single objective index measured over a fixed effective measurement duration.
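As a rough illustration of level matching against a main dialogue object, the sketch below blends two level differences measured over different durations. It is not the proposed algorithm: the choice of indices (whole-signal RMS and the highest windowed RMS), the linear blend, the direction of the weighting and the `similarity` parameter are all assumptions made for illustration.

```python
import math


def rms_db(samples):
    """RMS level in dB, floored to avoid log of zero."""
    mean_sq = sum(s * s for s in samples) / len(samples)
    return 10.0 * math.log10(max(mean_sq, 1e-12))


def short_term_db(samples, window):
    """Highest RMS level over consecutive non-overlapping windows."""
    levels = [
        rms_db(samples[i:i + window])
        for i in range(0, len(samples) - window + 1, window)
    ]
    return max(levels)


def matching_gain_db(main, alt, window, similarity):
    """Gain (dB) to apply to `alt` so its level approaches that of `main`.

    `similarity` in [0, 1] is a hypothetical utterance-timing similarity;
    here it weights the long-term index against the short-term one.
    """
    long_diff = rms_db(main) - rms_db(alt)
    short_diff = short_term_db(main, window) - short_term_db(alt, window)
    return similarity * long_diff + (1.0 - similarity) * short_diff


def apply_gain(samples, gain_db):
    """Scale samples by a gain given in dB."""
    g = 10.0 ** (gain_db / 20.0)
    return [g * s for s in samples]


# Toy signals: the alternative dialogue is the same tone at half amplitude.
main = [0.5 * math.sin(2 * math.pi * i / 50) for i in range(1000)]
alt = [0.25 * math.sin(2 * math.pi * i / 50) for i in range(1000)]
gain = matching_gain_db(main, alt, window=100, similarity=0.7)
adjusted = apply_gain(alt, gain)
```

With these toy signals both indices agree, so the blend weight has no effect; with real dialogue the two indices would generally differ and the weighting would matter.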

We also implemented in software, and tested, the algorithms that we proposed in FY2019 for measuring the loudness of object-based audio using objective indices, so that sound levels can be equalized between programs.

We began developing a mixing console for producing live programs using object-based audio (Figure 1-18). In FY2020, we implemented functions to load the audio metadata describing the program structure and other information; to extract from the original audio metadata the portion required for signal processing at each moment and output it synchronized with the sound signals; and to render the sound signals according to the monitor loudspeaker arrangement. We then tested the console connected to the audio metadata transmission equipment developed in FY2019. We also conducted joint tests with the European Broadcasting Union (EBU) to check compatibility between devices, consolidated specifications for live production including video, and improved our audio metadata transmission equipment according to the specifications(2).

For high-quality, efficient audio coding of object-based audio, we developed real-time MPEG-H 3D Audio (3DA) codec equipment using the scheme proposed in FY2019 to allocate optimized bit rates for each sound object(3). We also developed a library implementing functions such as replacing the background sound object and outputting sound to a second device.

Anticipating future AR and VR services, we produced content with audio that supports changing the height of the viewpoint, prototyped a user interface supporting the requirements of an envisaged service, and exhibited it at the IBC2020 online event and others (Figure 1-19). We also proposed a method for representing differences in perceived direction of sound depending on distance when the listening position is changed, and conducted subjective evaluations to test its effectiveness.

To evaluate audio formats that also have a vertical component in their loudspeaker arrangements, such as 22.2 multichannel audio, we developed a compact monitor loudspeaker that satisfies the performance requirements of the international standard, Recommendation ITU-R BS.1116-3(4). By reducing the size of the aperture of the audio part of the loudspeaker unit, which uses strong magnets, we were able to reduce the volume to approximately 1/3 that of conventional loudspeakers, while maintaining output of more than double the maximum sound pressure levels, flat frequency-response characteristics, and directional characteristics required by international standards.

In FY2020, to improve the playback quality of low-frequency-effect (LFE) loudspeakers, we measured group-delay characteristics to study the performance of LFE loudspeakers, checked the difference in performance between products, and checked the effects of a high-cutoff-frequency filter on the group-delay characteristics using both numerical simulation and empirical measurements.
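To make the notion of group delay concrete, the following sketch evaluates the group delay of a simple digital filter numerically. It is only an illustration of the quantity being studied, not the simulation used in this work. The one-pole low-pass filter, the 120 Hz cutoff, the 48 kHz sample rate and the function names are assumptions.

```python
import cmath
import math


def group_delay(b, a, omega):
    """Group delay in samples of H(z) = B(z)/A(z) at `omega` rad/sample.

    Uses the identity tau = Re(Br/B) - Re(Ar/A), where Br and Ar are the
    polynomials with coefficients k*b[k] and k*a[k].
    """
    z_inv = cmath.exp(-1j * omega)

    def poly(c):
        return sum(ck * z_inv ** k for k, ck in enumerate(c))

    def ramp(c):
        return sum(k * ck * z_inv ** k for k, ck in enumerate(c))

    return (ramp(b) / poly(b)).real - (ramp(a) / poly(a)).real


def one_pole_lowpass(cutoff_hz, sample_rate_hz):
    """Coefficients of y[n] = (1 - p) x[n] + p y[n-1] (a common approximation)."""
    p = math.exp(-2.0 * math.pi * cutoff_hz / sample_rate_hz)
    return [1.0 - p], [1.0, -p]


# Assumed example values: 120 Hz cutoff at a 48 kHz sample rate.
fs = 48000.0
b, a = one_pole_lowpass(120.0, fs)
for freq_hz in (20.0, 60.0, 120.0):
    omega = 2.0 * math.pi * freq_hz / fs
    delay_ms = 1000.0 * group_delay(b, a, omega) / fs
    print(f"{freq_hz:6.1f} Hz: group delay {delay_ms:.3f} ms")
```

Even this simple filter delays frequencies well below the cutoff more than those near it, which is the kind of frequency-dependent delay that a low-pass stage adds to an LFE channel.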

Standardization

We are advancing international and domestic standardization efforts for implementing next-generation audio services.

At the ITU-R, we added use cases for object-based audio to Report ITU-R BT.2207 on accessibility improvement and Report ITU-R BT.2420 on immersive media, and promoted discussion of object-based audio extensions to the requirements for audio coding methods and loudness measurement algorithms. We also contributed editorial revisions to Recommendation ITU-R BS.2076 for the audio definition model (ADM), which defines the audio metadata used by object-based audio, to Recommendation ITU-R BS.2094 for the ADM common definitions, and to Recommendation ITU-R BS.2127 for the ITU ADM renderer.

At SMPTE, we contributed to improvements to the sound-file metadata standard, SMPTE ST 377-42, which enables exchange of content among workers in production, broadcasting and communications, adding loudspeaker labels and other aspects to support 22.2 multichannel audio.

At MPEG, we contributed to the creation of an MPEG-H 3DA Baseline profile, which summarizes specifications to facilitate implementation of broadcast services using object-based audio.

At ARIB, we drafted domestic standards for a 64-bit audio file format supporting file sizes over 4 GB and object-based audio, as a next-generation audio file format to replace the current 32-bit audio file format. We also set up a new joint task group as requested by the Ministry of Internal Affairs and Communications (MIC), conducted a comparative study of audio coding methods supporting object-based audio, and presented an interim report to the Digital Terrestrial Broadcasting Format Advancement workgroup of the Information and Communications Council.
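The practical impact of the 4 GB limit can be seen with a short calculation. The sketch below assumes that the limit comes from a 32-bit unsigned size field, as in RIFF/WAVE-style containers, and ignores header overhead. The 48 kHz sample rate and 24-bit sample size are assumed example values; 22.2 multichannel audio has 24 channels.

```python
def max_duration_seconds(channels, sample_rate_hz, bytes_per_sample,
                         size_field_bits=32):
    """Longest uncompressed PCM recording that fits the size field."""
    max_bytes = 2 ** size_field_bits - 1
    bytes_per_second = channels * sample_rate_hz * bytes_per_sample
    return max_bytes / bytes_per_second


# 22.2 multichannel audio: 24 channels, assumed 48 kHz and 3 bytes per sample.
limit_32 = max_duration_seconds(24, 48000, 3)
limit_64 = max_duration_seconds(24, 48000, 3, size_field_bits=64)
print(f"32-bit size field: about {limit_32 / 60:.1f} minutes")
print(f"64-bit size field: about {limit_64 / (3600 * 24 * 365):.0f} years")
```

Under these assumptions a 32-bit size field holds only about 20 minutes of 22.2 multichannel PCM, shorter than a typical program, whereas a 64-bit field removes the limit for practical purposes.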

We have also taken on some of the duties involved in the MIC project to test technologies to address frequency bandwidth constraints, “Study regarding technical plans for effective utilization of broadcast frequencies (Study to implement efficient utilization of frequencies),” which has been contracted to the Association for Promotion of Advanced Broadcasting Service (A-PAB). This has included surveying trends at international organizations, such as audio coding format proposals for the MPEG-H 3DA Baseline profile, prototyping audio coding equipment conforming to standards, and verifying its operation to check its potential for use in broadcasting applications.