Exhibition 15

Sign Language CG Generation Technology from Japanese News Script

To deliver more information in sign language

We are researching technology for generating sign-language computer graphics (CG), in which Japanese sentences are input and a CG character reproduces them in sign language. Here, we exhibit a system that generates sign language CG conveying input Japanese news sentences in a form that is easy for people with hearing impairments to understand.

Research Objectives

Many people born with hearing impairments consider sign language their native language, and a considerable number of them want information to be provided in sign language in addition to Japanese text. We are researching technology for generating sign language from any sentence, with the aim of providing easy-to-understand information to people whose native language is sign language.

Subject

Because sign language has no written form, several elements take on great importance when expressing it as CG animation. Besides word order, these include rhythmic factors such as pauses and signing speed, mouth movements, and the use of space, none of which arise in translation between spoken languages such as Japanese and English. Consequently, a major issue in generating sign language CG is how to derive these elements from plain Japanese text and incorporate them into the CG animation.

To date, NHK researchers have developed sign language CG for applications such as sports commentary and weather reports based on data from the Japan Meteorological Agency.

These technologies, however, assume that sentence patterns are largely predetermined: the signing of many sentences is first recorded by motion capture, and CG videos are then produced by combining those recorded movements as needed. As a result, they cannot express text that differs greatly from the captured sentences.
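The template-based approach described above can be sketched roughly as follows. This is a hypothetical illustration, not NHK's implementation: the template name, the glosses, and the clip library are invented for the example. Fixed sentence patterns contain slots, each slot is filled with a motion clip recorded in advance, and any sentence that does not fit a known pattern, or that needs an unrecorded word, cannot be produced.

```python
# Hypothetical sketch of the template-based approach: fixed sentence patterns
# whose slots are filled with motion clips recorded in advance.
# Template names, glosses, and the clip library are invented for illustration.

# Each template is a sequence of fixed glosses and "{slot}" placeholders.
TEMPLATES: dict[str, list[str]] = {
    "weather": ["TOMORROW", "{region}", "WEATHER", "{condition}"],
}

# Glosses for which a motion-captured clip is assumed to exist.
CLIP_LIBRARY: set[str] = {"TOMORROW", "WEATHER", "TOKYO", "OSAKA", "SUNNY", "RAIN"}


def fill_template(pattern: str, slots: dict[str, str]) -> list[str]:
    """Return the clip sequence for a pattern, or raise KeyError if it cannot be built."""
    if pattern not in TEMPLATES:
        raise KeyError(f"no template for pattern {pattern!r}")
    clips: list[str] = []
    for item in TEMPLATES[pattern]:
        # Placeholders such as "{region}" are replaced by the caller's slot value.
        gloss = slots[item[1:-1]] if item.startswith("{") else item
        if gloss not in CLIP_LIBRARY:
            raise KeyError(f"no recorded clip for {gloss!r}")
        clips.append(gloss)
    return clips


print(fill_template("weather", {"region": "TOKYO", "condition": "RAIN"}))
# ['TOMORROW', 'TOKYO', 'WEATHER', 'RAIN']
```

The failure path is the point of the sketch: coverage is bounded by the patterns and clips captured in advance, so a new sentence type or an unrecorded word raises an error instead of producing signing.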

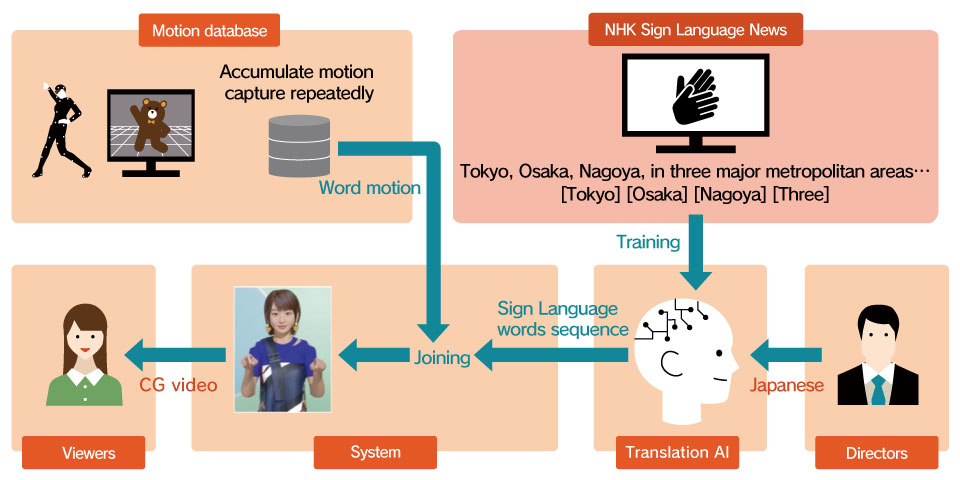

To remedy this problem, we are researching technology for generating CG animation that learns from parallel translations of Japanese and sign language collected over more than ten years of NHK sign language news, translates Japanese into sign-language word strings, and then smoothly concatenates individual words recorded by motion capture.
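The final step, smoothly concatenating motion-captured words, can be illustrated with a minimal sketch. It assumes, hypothetically, that each word clip is a non-empty list of frames and each frame is a list of joint angles, and it fills the gap between neighbouring words with linearly interpolated frames. The function names and the `transition_frames` parameter are illustrative; NHK's actual transition method is not described here.

```python
# Minimal sketch, assuming each word clip is a non-empty list of frames and
# each frame is a list of joint angles (degrees). Names are hypothetical.
Frame = list[float]
Clip = list[Frame]


def lerp_frame(a: Frame, b: Frame, t: float) -> Frame:
    """Linearly interpolate two poses joint by joint (t=0 gives a, t=1 gives b)."""
    return [x + (y - x) * t for x, y in zip(a, b)]


def concatenate_words(clips: list[Clip], transition_frames: int = 3) -> Clip:
    """Join word clips in order, inserting interpolated frames between neighbours."""
    if not clips:
        return []
    result: Clip = list(clips[0])
    for clip in clips[1:]:
        start, end = result[-1], clip[0]
        # Only interior points are generated; both endpoints already exist.
        for i in range(1, transition_frames + 1):
            t = i / (transition_frames + 1)
            result.append(lerp_frame(start, end, t))
        result.extend(clip)
    return result


# Two one-joint "words": the joint at 0 degrees, then at 40 degrees.
print(concatenate_words([[[0.0]], [[40.0]]], transition_frames=3))
# [[0.0], [10.0], [20.0], [30.0], [40.0]]
```

Linear interpolation of raw angles is the simplest choice. Rotation-aware interpolation such as quaternion slerp avoids artifacts when angles wrap around, and the transition length could be made to depend on how far apart the two poses are.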

This video demonstrates how the technology generates sign-language CG animation from Japanese input.

In the future, we aim to establish technology that can convert any text into sign language. At present, however, the range of Japanese text that can be translated is still quite limited. We are continuing our research with the aim of providing information that is easy for anyone to understand.

Detailed Explanations

The word order of sign language differs from that of Japanese, so generating sign language CG from arbitrary Japanese requires first translating Japanese into sign-language word strings. Meanwhile, machine translation between spoken languages such as Japanese and English has advanced considerably and is now used in a wide range of settings in society. Could the same approach work for converting Japanese into sign language?

At NHK STRL, we translate Japanese into sign-language word strings using a neural network model called a “transformer,” which is widely used in machine translation between spoken languages.
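The core operation of a transformer is scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, which lets each output position weigh every position of the input sentence. The pure-Python sketch below shows only this one operation on toy vectors. It is not NHK's translation model: a full transformer also includes learned projections, multi-head attention, and stacked encoder and decoder layers trained on parallel data.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Normalise scores into weights that sum to 1."""
    m = max(xs)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(
    queries: list[list[float]],
    keys: list[list[float]],
    values: list[list[float]],
) -> list[list[float]]:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is the weighted average of the value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


# A query that matches the first key far better than the second
# puts almost all of its weight on the first value vector.
print(attention([[10.0, 0.0]], [[10.0, 0.0], [0.0, 10.0]], [[1.0, 0.0], [0.0, 1.0]]))
```

In translation, the queries come from the output being generated and the keys and values from the encoded source sentence, which is how the model can reorder words rather than translating position by position.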

However, this technology alone cannot immediately produce sign language CG, because of the following difficulties characteristic of sign language:

- In Japanese, a sentence consists of a temporal sequence of individual words, but in sign language, two different words may be expressed simultaneously by the right and left hands.

- Sign language uses positions within space to express, for example, the time axis, the cardinal directions (north, south, east, and west), and the start/end points of an action.

- Sign language has many characteristic expressions, such as pointing, nodding, and shaking or tilting the head, each with its own meaning.

- The meaning of even a single hand or finger motion may change depending on context and on the accompanying facial expressions and mouth movements.
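One way to see why a flat word string is not enough is to model an utterance as several parallel tiers (right hand, left hand, head, and so on), each holding timed signs with an optional position in signing space. The data structure below is a hypothetical sketch; the tier names, glosses, timings, and `locus` field are assumptions for illustration, not NHK's internal representation.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Sign:
    gloss: str                   # label for the sign (hypothetical glosses)
    tier: str                    # articulator, e.g. "right_hand", "left_hand", "head"
    start: float                 # onset in seconds
    end: float                   # offset in seconds
    locus: Optional[str] = None  # optional position in signing space


@dataclass
class Utterance:
    signs: list[Sign] = field(default_factory=list)

    def active_at(self, t: float) -> list[Sign]:
        """Signs being produced at time t, on any tier."""
        return [s for s in self.signs if s.start <= t < s.end]

    def has_overlap(self) -> bool:
        """True if any two signs overlap in time, which a flat word string cannot express."""
        ordered = sorted(self.signs, key=lambda s: s.start)
        # After sorting by onset, any overlap implies an overlapping adjacent pair.
        return any(b.start < a.end for a, b in zip(ordered, ordered[1:]))


# The left hand holds a referent placed to the signer's right while the
# right hand signs, and a nod is added near the end.
example = Utterance([
    Sign("CAR", "left_hand", 0.0, 1.5, locus="signer_right"),
    Sign("PERSON-WALKS", "right_hand", 0.5, 1.5),
    Sign("NOD", "head", 1.2, 1.5),
])
print([s.gloss for s in example.active_at(1.3)])  # ['CAR', 'PERSON-WALKS', 'NOD']
print(example.has_overlap())  # True
```

A translation model that outputs a single word string yields an utterance with no overlaps, so simultaneous two-handed signing, spatial placement, and nods would have to be supplied in a later step.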

We have prepared a video explaining the entire flow, from the mechanism of sign-language translation to CG generation.

Going forward, we are committed to overcoming these problems and implementing services one step at a time.